How not to be an idiot

Mental models for catching yourself being wrong

Last time we covered six mental models for thinking clearly: how to think through consequences, see systems, understand people, and avoid false binaries.

Those are active frameworks. You apply them when you’re making decisions or navigating conflict.

But even with the best thinking tools, your brain still sabotages you. Mine does it constantly. It over-weights vivid events. It follows crowds without checking if they’re right. It rewrites history to make us feel smarter. It curates information to confirm what we already believe.

We can’t think our way out of these. They’re hardwired. The only fix is catching them before they cost us.

These are cognitive biases: systematic errors in how you process information and make decisions. Everyone has them. Smart people, dumb people, you, me, everyone.

Intelligence doesn’t protect you. If anything, it makes you better at rationalizing the bias after the fact.

The goal isn’t to eliminate biases. You can’t. The goal is to recognize the pattern early enough to question it.

Recognizing your biases

Your brain lies to you. Catch it before it costs you.

Availability bias

You over-weight recent or memorable events. If something just happened or was dramatic, you think it’s more common than it actually is.

Your friend got food poisoning from a restaurant. Now you won’t eat there. They serve thousands of meals safely every week. Your friend had one bad experience. But that one vivid story (your friend getting sick) outweighs all the unremarkable safe meals. The restaurant hasn’t changed, but your perception of the risk has.

You saw a viral post about AI screwing up, giving terrible advice, confidently hallucinating wrong answers. Now you think AI is completely unreliable. Meanwhile, you’ve used AI-powered tools successfully all day: Google search found what you needed, autocorrect fixed your typos, Netflix recommended something you actually liked, spam filter caught the junk. Dozens of quiet successes. One dramatic failure. Which one shapes your perception? Your brain curates content like a tabloid editor. Drama gets the headline. Everything working as expected doesn’t.

Someone got fired at your company. Suddenly everyone thinks they’re next. People are updating resumes, looking at job boards, whispering about layoffs. Reality: one person got fired for performance issues, it happens once a year, your job is fine. But that one firing is vivid and recent, so it feels like the start of mass layoffs. The fear isn’t proportional to the actual risk. One dramatic event rewrites the whole narrative. One hundred boring days of nothing happening? Your brain already forgot those.

There was one incident at a party where some young people got into a fight. Now everyone in the neighborhood thinks all parties with young people will end in a brawl. The community meeting is full of people saying “we need to ban these parties.” Reality: it’s the first incident in years. Hundreds of parties happened without problems. But that one incident is what everyone remembers.

We do this with everything. One plane crash on the news, suddenly flying feels dangerous (even though you drive every day, which is statistically riskier). One break-in in the neighborhood, suddenly the whole area “isn’t safe anymore” (even if it’s the first incident in five years). One friend’s startup failed, suddenly all startups feel like bad ideas.

The pattern: recent and vivid beats frequent and boring. One dramatic story outweighs a thousand unremarkable data points.

Your brain isn’t trying to be accurate. It’s trying to be efficient. Vivid memories are easy to recall. Boring, uneventful patterns are hard to remember. So when you ask yourself “is this risky?” your brain answers based on what’s easiest to remember, not what’s actually true.

The fix isn’t to ignore recent events. It’s to ask: “Is this one event representative, or is it an outlier? What does the actual data say? What’s my sample size?” One firing doesn’t mean layoffs are coming. One bad party doesn’t mean all parties are dangerous. One hallucination doesn’t mean AI is useless.

Check whether you’re reacting to a pattern or reacting to the most memorable example.

Spoiler: it’s usually the memorable one. Our brains are terrible at statistics but excellent at remembering drama.

Your brain tricks you with vivid memories. It also tricks you with crowds.

Bandwagon effect

You do something because everyone else is doing it, without questioning whether it makes sense for you.

Everyone’s investing in crypto. Your coworkers are talking about it, your friends are making money (or saying they are), it’s all over the news. So you invest. You don’t understand what blockchain actually is. You can’t explain how it works. You’re not sure why this specific coin is valuable. But everyone else is doing it, so it must be smart. Then the market crashes and you realize you were following the crowd into something you never understood. You’ve just enrolled in the expensive school of crowd-following. Tuition: whatever you invested.

Everyone’s trashing someone online. You see the pile-on, read a few angry posts, and join in. You don’t know the full story. You haven’t verified the claims. You just saw everyone else doing it, so it must be deserved. The hate wagon feels righteous when you’re part of the crowd. Later you find out the story was more complicated, or wrong, or taken out of context. But the damage is done and you were part of it.

Everyone’s watching Game of Thrones. I resisted for two years because I didn’t want to be part of the mainstream hype. When I finally gave in, I realized I could have been enjoying it all along. The only upside to waiting? I got to binge-watch it. Which meant watching every obvious protagonist die in a single day instead of spread across weeks. It hurt. I was still doing bandwagon thinking, just the contrarian version. Resisting something just because everyone else is doing it is still letting the crowd make your decision.

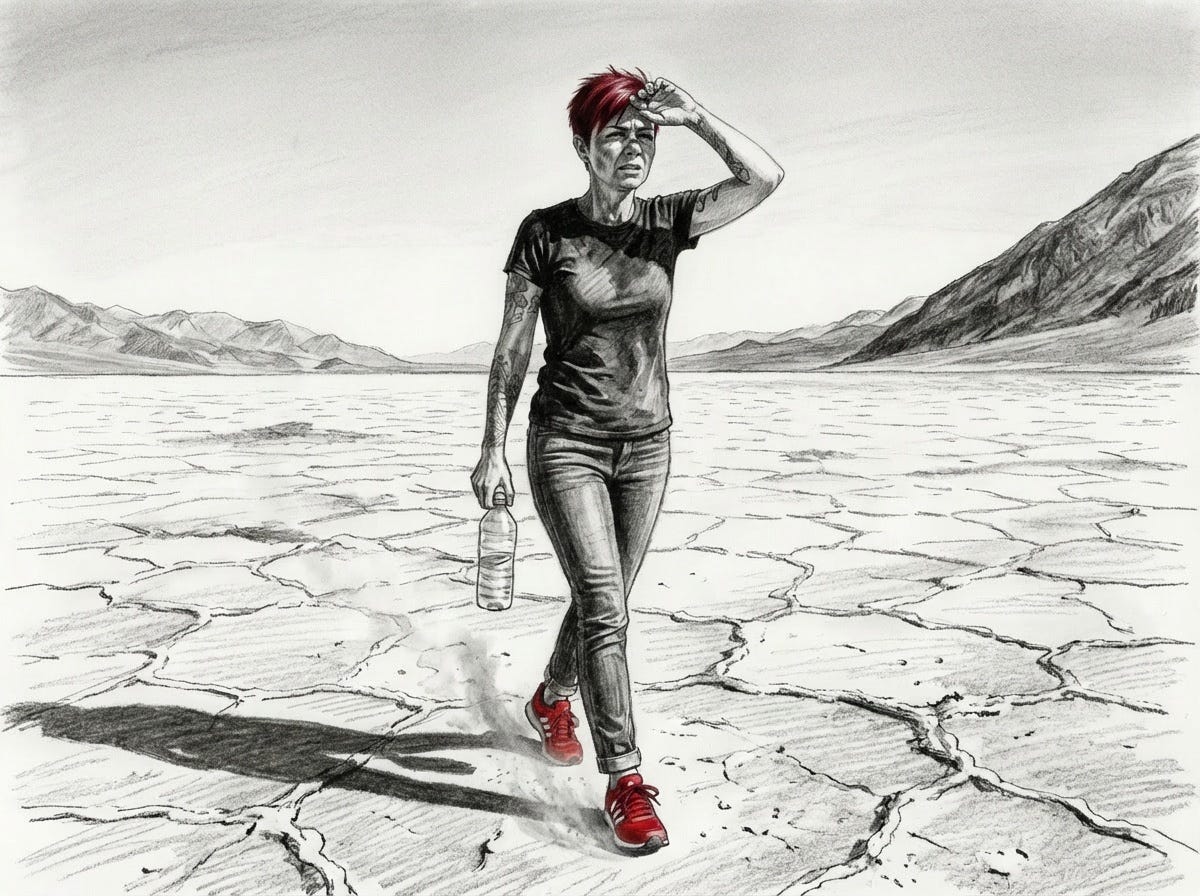

Everyone’s saying “real writers don’t use AI.” I don’t have time to master every writing skill, but I have something to say and want to share it. For me as a non-native English speaker, writing is sometimes like walking through Death Valley. I have to get to the other side fast, but I can’t, because I’m dehydrated. But I have a flask of water in my hand: AI tools. Should I die in the valley and stay quiet? Torture my readers with clunky prose, slowly dragging my feet through the heat? Or take a sip now and then and keep moving? You tell me. If you’re in the same spot, are you on a bandwagon of artificial purity, willing to die? Or are you prepared to ask yourself “would this tool actually help me?” Not everything is cheating. Not every kind of purism makes sense.

The bandwagon works both ways. Following the crowd. Resisting the crowd just to be different. Either way, you’re letting everyone else’s behavior make the decision instead of thinking for yourself.

The pattern: popularity becomes the reason. “Everyone’s doing it” becomes the justification. You skip the part where you ask “does this make sense for me?”

The fix: pause before following. Ask: “If nobody else was doing this, would I still think it’s a good idea? What problem does this solve for me? Do I actually understand what I’m signing up for?”

Sometimes the crowd is right. Sometimes everyone’s adopting something because it genuinely works. But “everyone else is doing it” isn’t evidence. It’s just momentum. Check whether you’re making a decision or just following traffic.

Following crowds without thinking is one trap. Rewriting history to make yourself look smart is another.

Hindsight bias

After something happens, you convince yourself you knew it all along. Hindsight bias makes past events seem obvious, even though they weren’t obvious at the time.

Your friends break up. “I knew they weren’t right for each other.” But when they got together, you didn’t say anything. You didn’t warn anyone. You went to their wedding and smiled in the photos. Now that it’s over, you remember every red flag like you predicted this from day one. You’re rewriting history. The relationship had problems, sure. Most relationships do. But you didn’t “know” they’d break up. You just think you did because you know the ending.

Someone you admire launches a business. You’re a little envious. They’re doing something bold, and you’re watching from the sidelines. A year later, it fails. “I knew that wouldn’t work.” But when they announced it, you didn’t say that. You thought “maybe they’ll succeed and I’ll regret not doing something similar.” Now that it failed, you remember every flaw in their plan like it was obvious. You’re not smarter than them. You just have the ending, and the ending makes everything look predictable.

Hindsight bias is especially harsh on people you secretly envy. When they succeed, it stings. When they fail, you retroactively claim you saw it coming. It’s a way to protect yourself. If their failure was obvious, then you weren’t wrong to play it safe. You were smart. They were reckless. Except you didn’t think that at the time. You thought they were brave and you were stuck. Hindsight turns envy into retroactive wisdom. It’s easier than admitting you’re rewriting the story.

Your team loses the game. “I knew they’d choke under pressure.” Except before the game, you said they had a good chance. You wore the jersey. You hoped they’d win. After they lose, you remember every mistake, every missed opportunity, every sign they weren’t ready. It all seems obvious now. It wasn’t obvious when the game started. You’re experiencing the outcome and pretending you predicted it.

The problem isn’t that you feel smart. It’s that hindsight bias prevents learning. If you think you “knew it all along,” you don’t ask “what did I actually miss?” You don’t update your thinking. You don’t examine why you didn’t act on this supposed knowledge. You just rewrite history to make yourself the person who saw it coming.

This makes you overconfident about predicting the future. If everything seems obvious in hindsight, you start thinking you’re good at forecasting. Turns out explaining the past and predicting the future are different skills. Retroactive pattern matching doesn’t help you forecast. One is a cognitive trap. The other is useful.

The fix: before the outcome is known, write down what you actually think will happen. When the outcome arrives, compare your prediction to what you claimed you “knew all along.” The gap between what you predicted and what you claim you predicted is hindsight bias. It’s humbling. It’s also the only way to get better at actually predicting things instead of just pretending you did.
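If you’d rather keep that log in code than in a notebook, it can be tiny. Here’s a minimal sketch in Python, not a prescribed method: the `Prediction` class, its field names, and the `hindsight_gap` function are all made up for illustration, and “confidence” is just a rough 0 to 100 guess you commit to in writing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    """One forecast, written down BEFORE the outcome is known.

    All names here are hypothetical, chosen for illustration only.
    """
    claim: str
    confidence_before: int                     # 0-100: how likely you thought it was at the time
    outcome: Optional[bool] = None             # fill in once reality weighs in
    confidence_recalled: Optional[int] = None  # 0-100: how sure you now *feel* you were


def hindsight_gap(p: Prediction) -> int:
    """Points by which remembered certainty drifted toward the actual outcome.

    Positive: memory moved toward what happened ("I knew it all along").
    Zero or negative: no drift in that direction.
    """
    if p.outcome is None or p.confidence_recalled is None:
        raise ValueError("record the outcome and your recalled confidence first")
    drift = p.confidence_recalled - p.confidence_before
    return drift if p.outcome else -drift


# Written down on announcement day, when you thought "maybe they'll succeed".
startup = Prediction(claim="The business fails within a year", confidence_before=30)

# A year later it failed, and now it feels like it was obvious.
startup.outcome = True
startup.confidence_recalled = 90

print(hindsight_gap(startup))  # 60
```

The exact number matters less than the habit. Note how sure you feel you were before you reread the old entry; otherwise the written record quietly corrects your memory and the gap disappears.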

Hindsight bias makes you feel smarter than you are. A filter bubble makes you feel more informed than you are.

Filter bubble

You only consume information that confirms what you already believe. Your social media feed shows you more of what you engage with. Your news sources align with your politics. Your friends think like you. Your professional circle shares your perspective. You think you’re well-informed. You’re actually seeing one slice of reality on repeat.

Your social feed is full of posts about how terrible X is. Everyone you follow agrees X is terrible. The algorithm shows you more anti-X content because that’s what you engage with. You see ten posts a day confirming X is the worst. You never see the reasonable defense of X, the nuanced take on X, or the data showing X isn’t as bad as your feed makes it look. You think the whole world agrees with you. Half the world doesn’t. You’re just not seeing them.

You only read news from sources that match your worldview. Conservative sources if you lean right. Progressive sources if you lean left. Tech publications that celebrate your favorite framework. Business outlets that confirm your management philosophy. You’re not getting news. You’re getting your existing beliefs reflected back at you with a “breaking news” banner: confirmation that you’re right, which feels great until reality has a different opinion. The information that contradicts your view exists. You’re just not consuming it.

You only hang out with people who think like you. Same industry, same role, same politics, same life stage, same worldview. When you ask for opinions, you ask people you know will agree with you. “Should I take this job?” You ask three friends who already think like you. They all say yes (or all say no). You feel validated. You didn’t get diverse perspectives. You got confirmation bias on steroids.

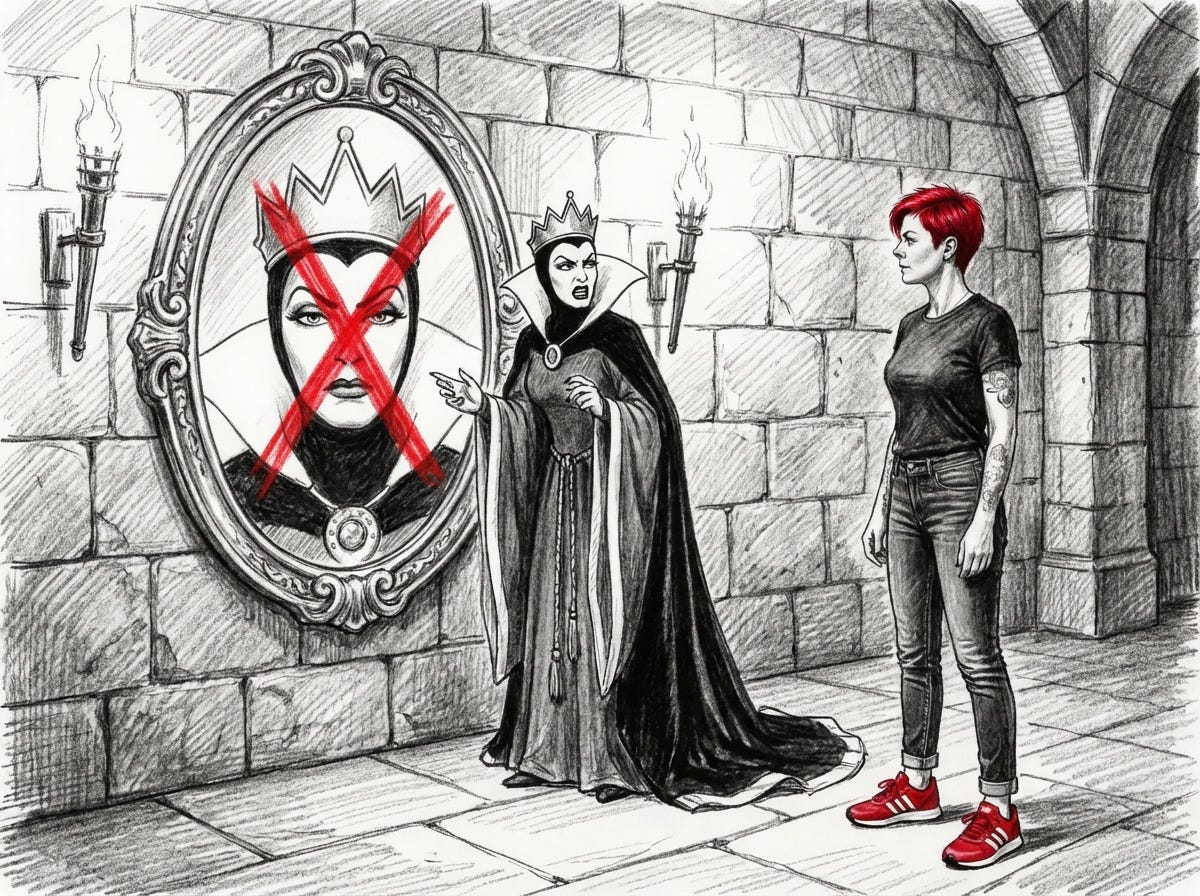

I’ve been intentionally building the opposite for years. I seek out friends who aren’t afraid to tell me I’m wrong. People who aren’t so fond of me that they’ll soften the blow. I need the mirror from Snow White, the one that told the Queen what she needed to hear, not what she wanted to hear. Turns out it’s easier to find than I expected. Plenty of people are happy to tell me I’m wrong. It’s uncomfortable. It’s also the only way to get actual feedback instead of validation disguised as advice.

The filter bubble makes you fragile. You’re not stress-testing your ideas against opposing views. You’re not encountering arguments that challenge your assumptions. When reality contradicts your bubble, you’re blindsided. “How did he win the election? Nobody I know voted for him.” Because your bubble isn’t representative. It’s comfortable, but it’s not reality.

The fix isn’t to consume everything. It’s to actively seek out perspectives that make you uncomfortable. Read one source that disagrees with you. Talk to someone outside your industry. Ask for opinions from people who might challenge you. Follow someone who thinks differently.

You don’t have to agree with opposing views. But you should know they exist, understand why people hold them, and be able to argue against them without caricaturing them.

The pattern: if everyone around you agrees all the time, you’re not informed. You’re insulated. Break the bubble before it breaks you.

Where to start

You don’t need to focus on all four. Start with the one that’s costing you the most right now.

Seeing patterns everywhere after one dramatic event? That’s availability bias. Ask: “Is this representative or just memorable?”

Following what everyone else is doing without questioning it? Bandwagon effect. Ask: “If nobody else was doing this, would I still think it’s smart?”

Convinced you saw it coming after the fact? Hindsight bias. Write down predictions before outcomes happen.

Everyone you talk to agrees with you all the time? Filter bubble. Actively seek out one perspective that challenges you.

Remember: the goal isn’t to eliminate biases, but to catch them early enough to question whether you’re reacting to reality or to the way your brain distorts it. Catch yourself before the bias makes the decision for you.

Mental models won’t make you smarter. They’ll make you wrong less often. Which, honestly, is better than being confidently wrong more often.

Start today. Pick one bias. Watch for it. Notice when it shows up. The rest will build from there.

Part of a series on mental models: how to be less wrong, not be an idiot, lead without breaking your team, and build things without losing your sanity.